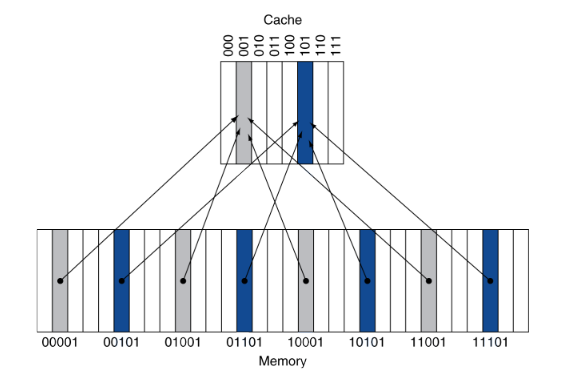

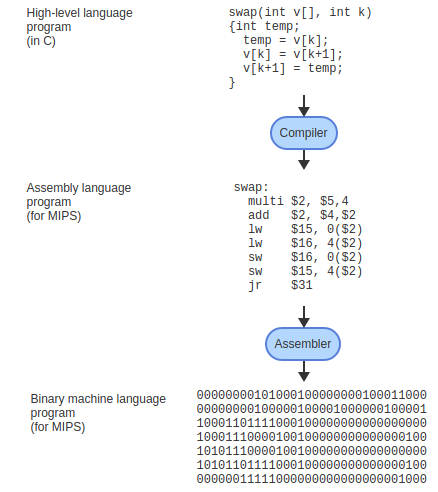

# Caches ## ...and Wrap Up --- CS 130 // 2021-12-08 <!--====================================================================--> ## Administrivia - Plan to return Quiz 5 and Assignment 5 this week - <!-- .element: class="fragment"--> Unfortunately will not have time to return Assignment 3 before the exam <!--====================================================================--> # Final Exam - Monday, December 13th - 7:30 AM to 9:20 AM - Meredith Hall 101 <!----------------------------------> ## Final Exam - **Format of the exam**: + Written, in-class, 110-minute exam + Closed textbook/notes/internet - <!-- .element: class="fragment"--> Exam will be cumulative and cover materials from the entire semester <!----------------------------------> ## Final Exam "Cheatsheet" - You may bring a one 8.5x11 sheet of paper with you to the final exam - This sheet may have notes on both sides as long as: + All notes are **handwritten** by **YOU** - <!-- .element: class="fragment"--> My hope is that thinking through what to include on the sheet and preparing it will be an effective study mechanism <!--====================================================================--> # Questions ## ...about anything? <!--====================================================================--> # Caches <!-- .slide: data-background="#004477" --> <!--====================================================================--> ## Fibonacci - Have you ever seen the following function that computes the `$n$`th Fibonacci number? ```py def fib(n): if n == 0 or n == 1: return 1 else: return fib(n-1) + fib(n-2) ``` - <!-- .element: class="fragment"--> What do you think will happen when I run this? ```py >>> fib(100) ``` - <!-- .element: class="fragment"--> Will take roughly 40 billion years on a computer running 1 trillion recursive calls per second <!----------------------------------> ## Fibonacci with a Cache - We can speed up this algorithm by using a **cache** ```py cache = {0:1, 1:1} def fib(n): if n not in cache: cache[n] = fib(n-1) + fib(n-2) return cache[n] ``` - <!-- .element: class="fragment"--> This technique is called **memoization** and can speed up recursive algorithms dramatically by caching results that have already been computed - <!-- .element: class="fragment"--> Instead of `$2^n$` recursive calls, this "memoized" implementation makes at most `$n$` recursive calls <!--====================================================================--> ## Memory Organization - When a program uses memory, it tends to use it in predictable ways just like the Fibonacci function - <!-- .element: class="fragment"--> As a result, it is possible to speed up memory usage dramatically by creating a **memory hierarchy** + <!-- .element: class="fragment"--> Register file is small but it's ridiculously fast + <!-- .element: class="fragment"--> SRAM is larger but slower + <!-- .element: class="fragment"--> DRAM is larger still but even slower + <!-- .element: class="fragment"--> Hard disks are HUGE but also the slowest <!--====================================================================--> ## Terminology - **Cache:** + An auxiliary memory from which high-speed retrieval is possible - <!-- .element: class="fragment"--> **Block:** + A minimum unit of information that can either be present or not present in the cache - <!-- .element: class="fragment"--> **Hit:** + CPU finds what it is looking for in the cache - <!-- .element: class="fragment"--> **Miss:** + CPU doesn't find what it's looking for in the cache <!--====================================================================--> ## Designing a Cache - Having a hierarchy of memories to speed up our computations is great, but we also face several design challenges - <!-- .element: class="fragment"--> If we are looking for a value in memory address `x`, how do we know if it is already in the cache? <!--====================================================================--> ## Direct Mapped Cache - One idea is to use a **direct mapped cache** where the address `$x_n$` tells us where to look in the cache - <!-- .element: class="fragment"--> If there are `n` slots in the cache, then we look for `x` in the `x % n` index of the cache <!----------------------------------> ## Direct Mapped Cache  <!----------------------------------> ## Direct Mapped Cache - If the number of blocks in the cache is `$n = 2^k$`, then `(x % n)` is simply the last `k` bits of `x` + Makes it extremely efficient to find where a block is in the cache - <!-- .element: class="fragment"--> Since multiple blocks may have the same index in the cache, how do we know if the block of memory there is the one we're looking for? + <!-- .element: class="fragment"--> Include a **tag** that uniquely identifies the block <!----------------------------------> ## Direct Mapped Cache - Some parts of the cache can be empty and/or underutilized if their index, by chance, doesn't pop up as often - <!-- .element: class="fragment"--> How can we improve utilization? <!--====================================================================--> ## Fully Associative Cache - The "extreme" alternative to direct mapped caching is **fully associative** caching + Any block can be stored in *any* index of the cache --- - <!-- .element: class="fragment"--> **Advantage**: Every spot in the cache will be used, and therefore less cache misses will occur - <!-- .element: class="fragment"--> **Disadvantage**: We need to search the entire cache every time, so the hit time will increase <!--====================================================================--> ## Set-Associative Cache - The compromise between these approaches is the **set-associative** cache + Blocks are *grouped* into sets of `n` blocks + Block number determines which set - <!-- .element: class="fragment"--> Still requires searching through all `n` blocks in a set - <!-- .element: class="fragment"--> Can be tuned to have a decent balance between hit rate and hit time <!--====================================================================--> ## Handling a Cache Miss 1. Check the cache for a memory address + Results in a miss 2. <!-- .element: class="fragment"--> Fetch corresponding block from RAM or disk + Wait for block to be retrieved (stall) + <!-- .element: class="fragment"--> Write block to cache 3. <!-- .element: class="fragment"--> Continue pipeline (unstall) <!--====================================================================--> ## Multilevel Caches - Usually, caches are implemented in multiple "levels" for maximal efficiency + <!-- .element: class="fragment"--> L1 is smallest/fastest + <!-- .element: class="fragment"--> L2 is larger/slower + <!-- .element: class="fragment"--> L3 is largest/slowest - <!-- .element: class="fragment"--> Modern multi-core processors typically have dedicated L1 and L2 caches for each core and a shared L3 cache <!--====================================================================--> # C Examples <!--====================================================================--> # Reflection <!-- .slide: data-background="#004477" --> <!--====================================================================--> ## Overarching Theme - Learning how a high-level program is actually executed on your computer's processor <!---------------------------------->  <!---------------------------------->  <!--====================================================================--> ## Learning Goals - **Digital Logic:** Basic logic and hardware gates that carry out the computations at the nanoscale - <!-- .element: class="fragment"--> **Computer Organization:** Techniques for optimizing hardware performance - <!-- .element: class="fragment"--> **Assembly Language Programming:** Coding with instructions that a computer processor can execute directly <!--====================================================================--> ## Godbolt - There is an incredibly useful, free, online tool for exploring how certain programs are compiled into assembly language instructions - <https://godbolt.org/> <!--=====================================================================--> # Evaluations - [Click Here for Evaluation](http://drake.qualtrics.com/jfe/form/SV_5j3GjCJDDm0CLVY) <!--=====================================================================--> # Exam Practice <!-- .slide: data-background="#004477" --> <!--====================================================================--> ## Jeopardy - Sadly, Alex Tredek passed away last year - <!-- .element: class="fragment"--> To honor him, we will play **Jeopardy!** <!----------------------------------> <!-- .slide: data-background="#004477" --> ## This small device acts as an on/off switch powered by electricity. Modern CPUs are composed of over 290 million of these. --- What is a **transistor**? <!-- .element: class="fragment"--> <!----------------------------------> <!-- .slide: data-background="#004477" --> ## This program is responsible for translating MIPS assembly into machine object code. --- What is the **assembler**? <!-- .element: class="fragment"--> <!----------------------------------> <!-- .slide: data-background="#004477" --> ## The following 32-bit sequence represents this decimal number when interpreted using signed two's complement. 1111 1111 1111 1111 1111 1111 1110 1000 --- What is -24? <!-- .element: class="fragment"--> <!----------------------------------> <!-- .slide: data-background="#004477" --> ## When `beq` is being executed, this ALU operation is performed on the two operands. --- What is subtraction? <!-- .element: class="fragment"--> <!----------------------------------> <!-- .slide: data-background="#004477" --> ## The C code below is equivalent to these MIPS assembly instructions. ```c // arr1 and arr2 are int arrays // arr1 is stored in register $s1 // arr2 is stored in register $s2 arr1[2] = arr1[arr2[3]] + 7; ``` --- ```mips lw $t2, 12($s2) # Loads arr2[3] into t2 sll $t2, $t2, 2 # Multiplies t2 by 4 add $t1, $s1, $t2 # Gets address of arr1[arr2[3]] lw $t1, 0($t1) # Loads arr1[arr2[3]] into t1 addi $t1, $t1, 7 # Adds 7 to it sw $t1, 8($s1) # Stores result back in arr1[2] ``` <!-- .element: class="fragment"--> <!--====================================================================--> # Other Practice Problems <!--====================================================================--> ## Exercise 1 - Consider the following MIPS code ```mips lw $t1, 16 ($t2) add $s2, $t1, $t1 ``` - Identify any hazards that are present - Assuming there is hazard detection implemented, describe how any hazards would be resolved and indicate whether any stalls (nops) are necessary - How many cycles are necessary to execute both instructions? <!--------------------------------->- ## Exercise 2 - Describe the difference between the `j` and `jal` MIPS instructions.